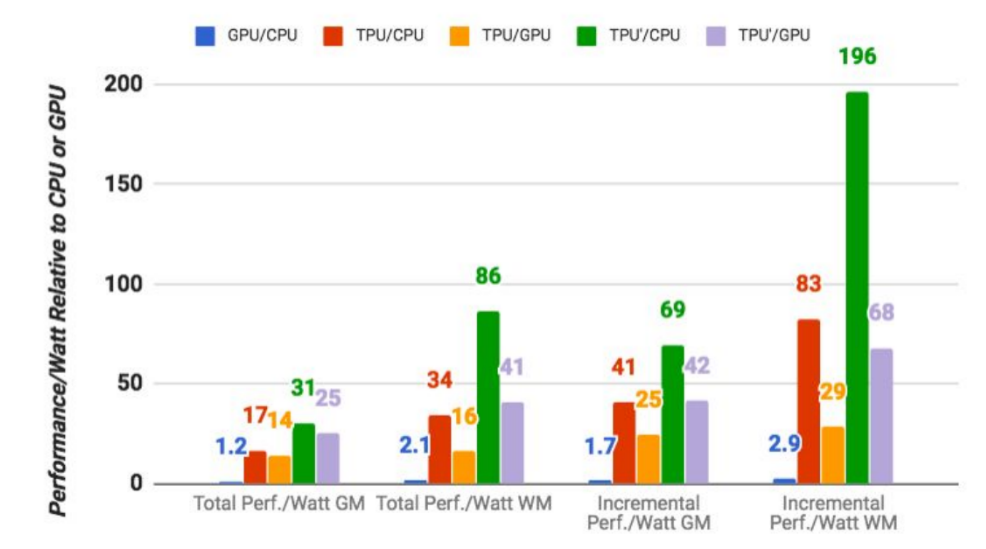

Google says its custom machine learning chips are often 15-30x faster than GPUs and CPUs | TechCrunch

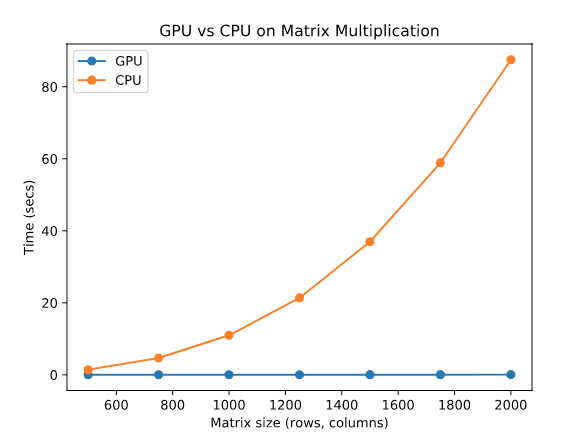

A comparison of floating point performances between Intel CPU, ATI GPU...

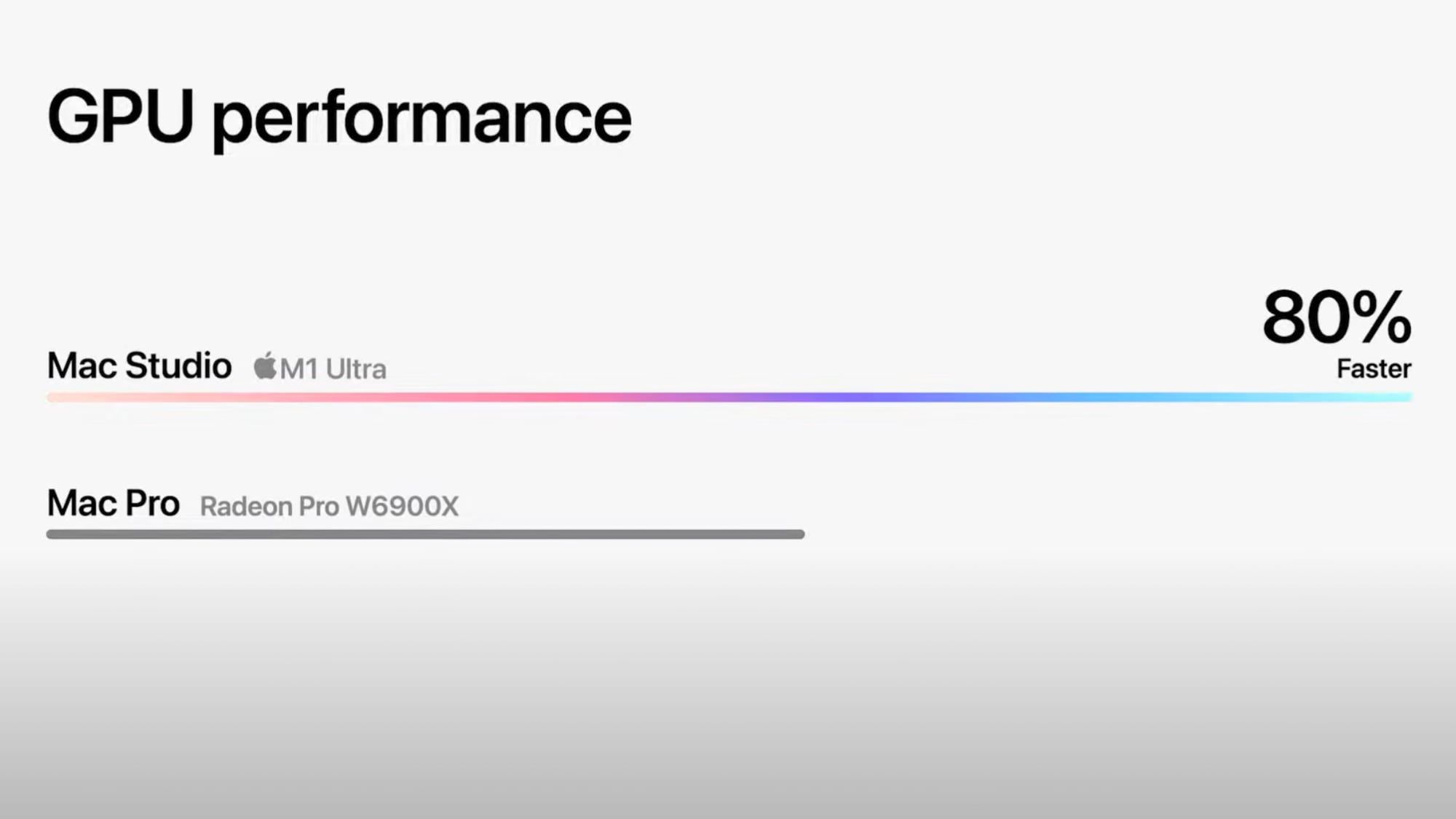

Apple Unveils M1 Ultra SOC: CPU Faster Than Intel 12900K at 100W Less Power, GPU On Par With NVIDIA RTX 3090 at 200W Less Power